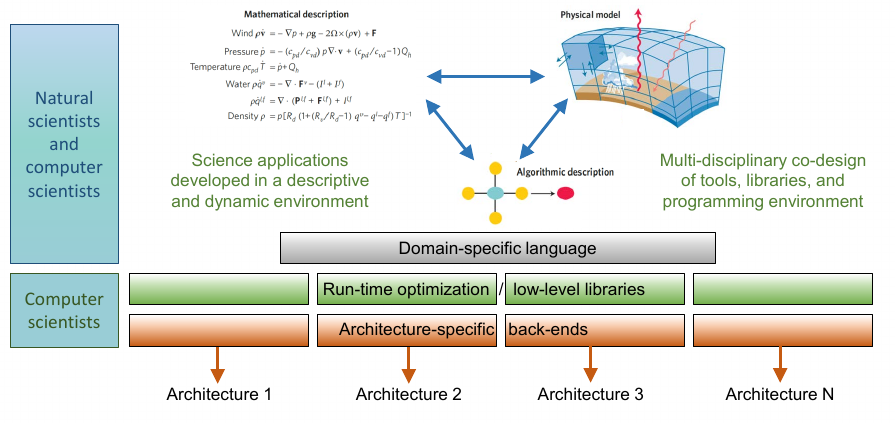

Topic 1: Development of ESM dwarfs and separation of concerns

The main goal of Topic 1 is to overcome scalability and portability issues of Earth system models (ESMs) on future HPC architectures. Following the concept of the seven dwarfs of HPC, the ESM dwarfs comprise the most important algorithms, mathematical kernels, and technical abstractions within the framework of numerical Earth system simulations. To bridge the gap between the continuous, multi-institutional, science-driven optimization of ESM codes and the need to run these codes efficiently on modern supercomputer architectures, Topic 1 encourages natural and computer scientists to apply the “separation of concerns” principle. Starting from the ESM dwarfs, architecture-specific optimizations are implemented and explored by employing low-level libraries or dedicated code developments, typically written in a domain-specific language (DSL). In PL-ExaESM, specific attention is given to numerical performance aspects that are relevant for coupled model systems.

Atmosphere

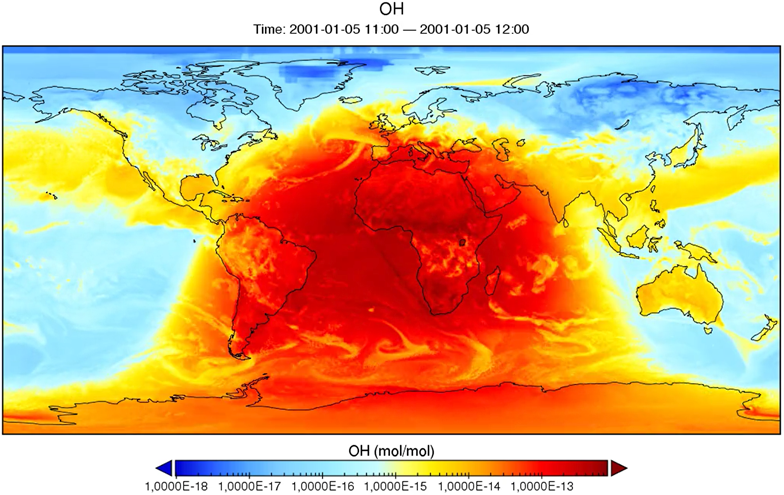

Atmospheric chemistry calculations consume a major share of the compute time in ESM simulations, while offering high potential for parallelization. A prototype atmospheric chemistry dwarf (AC) is developed that makes use of the MESSy infrastructure, allowing essentially all MESSy submodels to be run in the dwarf structure.

The AC Dwarf is used to test and improve the performance of the chemistry scheme on GPU architectures. Different approaches, such as CUDA C, CUDA Fortran, and OpenACC, are explored.
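The parallelization potential mentioned above comes from the fact that, in a box-model setting, the chemistry in one grid cell does not depend on neighbouring cells during a chemistry step. The following Python sketch illustrates only that independence; it is not MESSy or AC Dwarf code. The single decay reaction, the rate constant `k`, and the function names `integrate_cell` and `chemistry_step` are hypothetical. On a GPU, the same structure would typically map one cell to one thread.

```python
from concurrent.futures import ThreadPoolExecutor

def integrate_cell(conc0, k, dt, n_steps):
    """Integrate dc/dt = -k*c in a single cell with backward Euler (toy chemistry)."""
    c = conc0
    for _ in range(n_steps):
        c = c / (1.0 + k * dt)  # implicit step, stable even for large k*dt
    return c

def chemistry_step(concentrations, k=0.5, dt=0.1, n_steps=10):
    """Apply the toy chemistry to every cell; cells never exchange data here."""
    with ThreadPoolExecutor() as pool:
        # pool.map preserves input order, so results line up with the cells.
        return list(pool.map(lambda c: integrate_cell(c, k, dt, n_steps),
                             concentrations))

cells = [1.0, 2.0, 4.0]          # hypothetical initial concentrations
result = chemistry_step(cells)
serial = [integrate_cell(c, 0.5, 0.1, 10) for c in cells]
```

Because no cell reads another cell's state, the parallel and serial results agree, and the work can be split across any number of workers without communication.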

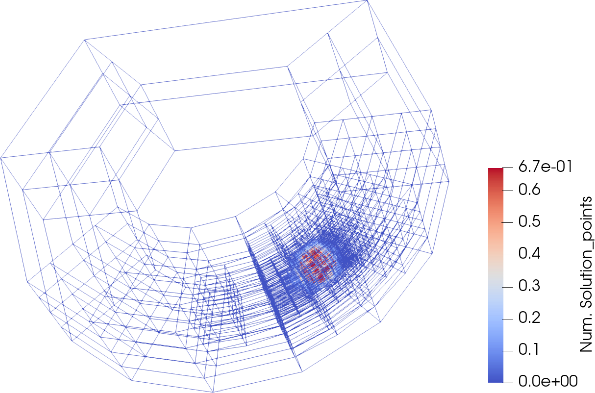

A new model to simulate advection and diffusion of atmospheric flows using dynamic adaptive grids with the t8code library is currently being developed at DLR-SC, allowing for variable grid types and resolutions. The box-model chemistry of the atmospheric chemistry dwarf is integrated into this model to enable chemistry calculations.

Terrestrial Systems

ParFlow, a model for variably saturated subsurface flow that includes pumping, irrigation, and integrated overland flow, is being optimized for modern heterogeneous supercomputer environments. GPU support in ParFlow is encapsulated in an embedded domain-specific language (ParFlow eDSL), which yields good developer productivity, long-term codebase maintainability, and performance gains. The implementation leverages Unified Memory (UM), which is especially important for developer productivity and a minimally invasive implementation, and a UM pool allocator, which increases performance significantly on GPUs.
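A pool allocator helps when temporary buffers of the same size are requested again and again, for example inside a time-stepping loop: released buffers are kept and handed out again instead of paying for a fresh allocation each time. The sketch below shows the idea in plain Python under stated assumptions. It is not ParFlow's allocator, which manages GPU Unified Memory; the class `PoolAllocator`, its methods, and the `bytearray` stand-in for an expensive allocation are all hypothetical.

```python
from collections import defaultdict

class PoolAllocator:
    """Toy pool: reuse released buffers of the same size instead of reallocating."""

    def __init__(self):
        self._free = defaultdict(list)  # buffer size -> list of idle buffers
        self.fresh_allocations = 0      # counts the "expensive" allocations

    def allocate(self, size):
        if self._free[size]:
            # Reuse an idle buffer; note that its old contents are not cleared.
            return self._free[size].pop()
        self.fresh_allocations += 1
        return bytearray(size)  # stands in for a costly unified-memory allocation

    def release(self, buf):
        # Keep the buffer for later reuse rather than freeing it.
        self._free[len(buf)].append(buf)

pool = PoolAllocator()
for _ in range(1000):            # e.g. one temporary buffer per time step
    tmp = pool.allocate(4096)
    pool.release(tmp)
```

In this loop only the first request triggers a fresh allocation; the remaining 999 requests are served from the pool.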

The ParFlow results demonstrate very good weak scaling, with up to 26x speedup over hundreds of nodes on a state-of-the-art supercomputer (JUWELS Booster) with NVIDIA A100 GPUs. GPU support enables the use of the latest HPC hardware and makes the move from continental to global simulations feasible.

Ocean

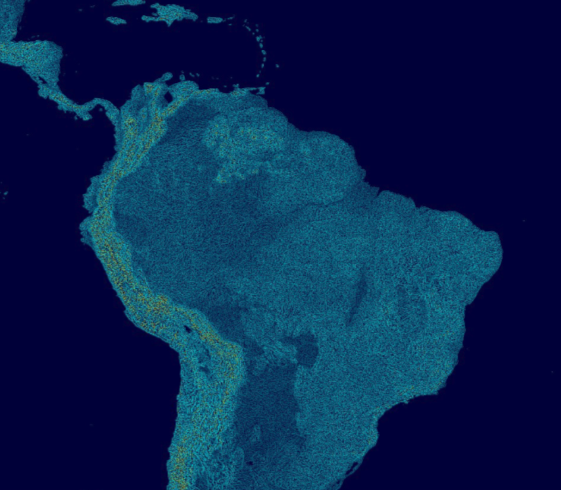

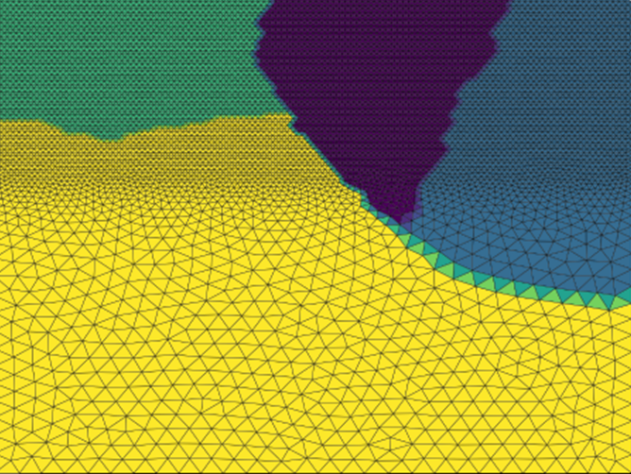

The aim is to improve the exascale readiness of the ocean model FESOM2. FESOM2 uses unstructured meshes with variable resolution and a finite-volume method. The mesh flexibility makes it possible to increase resolution in dynamically active regions while keeping a relatively coarse resolution elsewhere.
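To illustrate how a finite-volume method copes with variable resolution, the sketch below performs explicit diffusion steps on a periodic 1D mesh whose cells are fine in the middle and coarse elsewhere. This is a deliberately simplified toy, not FESOM2's discretization, which operates on unstructured meshes; the function `diffuse` and all parameter values are assumptions chosen for the example. Because each face flux leaves one cell and enters its neighbour, the width-weighted total is conserved regardless of the cell sizes.

```python
def diffuse(values, widths, kappa, dt):
    """One explicit finite-volume diffusion step on a periodic 1D mesh
    with variable cell widths."""
    n = len(values)
    flux = []
    for i in range(n):
        j = (i + 1) % n                        # right neighbour, periodic wrap
        dist = 0.5 * (widths[i] + widths[j])   # distance between cell centres
        flux.append(-kappa * (values[j] - values[i]) / dist)
    # Each cell changes by (inflow - outflow) divided by its own width;
    # flux[i - 1] with i == 0 is the face between the last and first cell.
    return [values[i] + dt * (flux[i - 1] - flux[i]) / widths[i]
            for i in range(n)]

# Coarse cells (width 1.0) around a refined region (width 0.1).
widths = [1.0] * 4 + [0.1] * 10 + [1.0] * 4
values = [0.0] * len(widths)
values[9] = 1.0                                # tracer blob in the refined region

mass_before = sum(v * w for v, w in zip(values, widths))
for _ in range(100):
    values = diffuse(values, widths, kappa=1.0, dt=0.002)
mass_after = sum(v * w for v, w in zip(values, widths))
```

The time step is limited by the smallest cell, which is one reason why refining only the dynamically active regions keeps the overall cost manageable.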

During a simulation, FESOM2 has to process vast datasets of atmospheric forcing, stored as files in netCDF format. An independent, unit-tested module has been developed to distribute the load on the HPC system when reading and synchronizing the forcing data. This minimizes the impact of huge forcing datasets on the simulation and spreads the workload more evenly across the file system and the HPC network. In addition, the actual file reading can be offloaded and performed asynchronously by a dedicated C++ library. This results in a speedup of up to a factor of 11 when reading large ERA5 reanalysis forcing datasets.
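The benefit of asynchronous reading comes from overlapping I/O with computation: while the model computes one time step, the forcing for the next step is already being read in the background. The Python sketch below shows this read-ahead pattern only. It is not the FESOM2 module or the C++ library mentioned above; `read_forcing` and `compute` are hypothetical placeholders for a netCDF read and a model time step.

```python
from concurrent.futures import ThreadPoolExecutor

def read_forcing(step):
    """Hypothetical stand-in for reading one time slice of forcing data."""
    return [float(step)] * 4

def compute(step, forcing):
    """Hypothetical stand-in for one model time step that uses the forcing."""
    return sum(forcing)

def run(n_steps):
    results = []
    with ThreadPoolExecutor(max_workers=1) as io_pool:
        pending = io_pool.submit(read_forcing, 0)       # prefetch the first slice
        for step in range(n_steps):
            forcing = pending.result()                  # blocks only if the read is unfinished
            if step + 1 < n_steps:
                # Start reading the next slice before computing the current step.
                pending = io_pool.submit(read_forcing, step + 1)
            results.append(compute(step, forcing))      # overlaps with the background read
    return results

results = run(5)
```

A single background worker keeps the reads in order, so the model always receives the slice that belongs to the current step.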

Starting from a stand-alone version of FESIM, the Finite-Element Sea Ice Model used in FESOM2, work has started to compare the performance of the currently used explicit pseudo-time stepping (mEVP model) with that of the VP model. The latter, combined with provably scalable Schwarz-type preconditioning, is significantly faster and reduces the number of communication steps during the simulations.